Asma Alotaibi, Ahmed Barnawi, and Mohammed Buhari | Department of Information Technology, King Abdulaziz University.

Cloud computing is a widely used computing paradigm that offers its resources over the Internet. It provides many advantages to end users, such as lower cost, high reliability, and greater flexibility. However, it has some drawbacks, including high latency, dependence on high-bandwidth Internet connectivity, and security concerns [1].

Over the last few years, a new trend in Internet deployments has emerged: the Internet of Things (IoT), which envisions every device being connected to the Internet. Its applications include e-healthcare and the smart grid. These applications require low latency, mobility support, geo-distribution, and user location awareness. Cloud computing appears to be a satisfactory way to offer services to end users, but it cannot meet the IoT’s requirements. As a result, a platform that can meet them is needed; fog computing, proposed by Cisco in 2012, is a promising candidate [2].

Fog computing is a concept that extends the paradigm of cloud computing to the network edge, allowing for a new generation of services [3]. It adds an intermediate layer between end devices and the cloud, which leads to a three-layer hierarchy: Cloud-Fog-End Users [4]. The goal of fog computing is to offer resources in close vicinity to the end users. As shown in Figure 1, each fog is located in a specific building and offers services to those inside the building [4]. Fog computing supports low latency, user mobility, real-time applications, and a wide geographic distribution. In addition, it enhances the quality of service (QoS) for end users. These features make the fog an ideal platform for the IoT [5].

Figure 1: Fog computing supports low latency, user mobility, real-time applications, and a wide geographic distribution.

Support of location awareness is the key difference between the cloud environment and the fog environment. Cloud computing serves as a centralized global model, so it lacks location awareness. In contrast to cloud computing, fog devices are physically situated in the vicinity of end users [6].

Data sharing is important to many people, and it is an urgent need for organizations that aim to improve their productivity [7]. There is currently a pressing need to develop data sharing applications, especially for mass communication, where the data owner is responsible for delivering shared resources to a large group of users. This one-to-many model (one data owner, many users) needs special care because of the challenges such applications face, chiefly security and privacy [8].

Like cloud computing, fog computing faces several security threats to data storage. To counter them, the security features provided in the cloud environment can be used: enforcement of fine-grained access control, data confidentiality, user revocation, and collusion resistance between entities [9].

In their research paper, the authors propose an architecture for data sharing in a fog environment. They explore the benefits that fog computing brings to one-to-many data sharing applications.

There are four parties in the proposed system: the Data Owner, the Cloud Servers, many Fog Nodes, and the Data Users.

Data Owner (DO) has the right to access and alter the data. He encrypts the data with the attributes of a specific group and generates the decryption keys for users. Then, he uploads the encrypted data to the cloud servers.

Cloud Server (CLD) is responsible for data storage and deploys the data to the fog nodes.

Fog Nodes (FNs) are responsible for data storage and for addressing users’ requests. They are considered a semi-trusted party. They execute the operations of the user revocation phase.

Data Users (Us) are those who request data access when they have the rights to access the data, that is, only when the data attributes satisfy the user’s access policy.

The fog environment scenario is shown in Figure 2, where a DO encrypts a data file and then outsources it to a CLD for storage. The CLD then deploys the data file to the specific fog node via the data distribution protocol, as will be shown later. Fog nodes are geographically distributed within a specific domain, and they have fixed locations. The user may be mobile and requests the data from the fog node closest to him. The fog node receives the user’s request and delivers the file to the user. The DO can delegate most of the tasks to the home fog nodes, as shown in the following section. In the fog-based data sharing model, both the fog nodes and the data owner can be connected to the cloud via the Internet. The fog nodes are connected to each other via a wired network over the Internet. The users can connect to the fog node using a wireless technology such as Wi-Fi, as shown in Figure 2.

Figure 2: The fog environment scenario

This model consists of groups of users, and each group has a set of attributes and a basic location. Each group has many users who share the same attributes. One of the group attributes refers to its location, and group members connect with the fog that has the same location. The data owner assigns many files to each group on the basis of the attributes and needs of its members. Each fog node serves one group and operates independently, so it is not affected when a user is revoked from another group. Therefore, the proposed revocation mechanism requires re-encrypting the affected files and updating the secret keys for only one group.
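The group-scoped revocation described above can be illustrated with a small bookkeeping sketch. It performs no cryptography: the `Group` class, the `revoke_user` function, and the `key_version` counter are assumptions introduced here for illustration and do not come from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class Group:
    """A user group served by exactly one fog node (hypothetical model)."""
    name: str
    location: str                       # location attribute shared by the group
    members: set = field(default_factory=set)
    files: set = field(default_factory=set)
    key_version: int = 1                # stands in for the group's secret keys


def revoke_user(groups, group_name, user_id):
    """Remove a user and return the files that would need re-encryption.

    Only the revoked user's own group is touched; every other group keeps
    its files and key version unchanged.
    """
    group = groups[group_name]
    group.members.discard(user_id)
    group.key_version += 1              # placeholder for updating secret keys
    return set(group.files)             # placeholder for files to re-encrypt


groups = {
    "building_a": Group("building_a", "A", {"u1", "u2"}, {"f1", "f2"}),
    "building_b": Group("building_b", "B", {"u3"}, {"f3"}),
}
affected = revoke_user(groups, "building_a", "u2")
```

After the call, only the files and keys of `building_a` are marked for an update, while `building_b` is left as it was.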

Data Distribution Protocol

Two types of fog in the data distribution architecture are defined:

Home Fog (HF): the fog at the user’s original location, where the user is most likely to be found. It stores the user’s data and manages the processes.

Foreign Fog (FF): a fog located away from the user’s original location, at the place where the user currently resides, as shown in Figure 3.

Figure 3: Data Distribution Architecture - The Foreign Fog and Home Fog

The proposed system comprises two kinds of data centers:

Cloud data centers (which include the data centers for each group).

Local fog data centers

Each fog node is considered the “Home Fog” for the group whose members share its location, and the “Foreign Fog” for all other groups.

A local data center is fog storage that holds copies of secret files. It is preloaded with the data required by fog users. The fog nodes maintain communication with the cloud. Data sharing between the cloud data center and each fog node data center is performed through immediate synchronization based on the unicast method.
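As a minimal sketch of this Home Fog versus Foreign Fog distinction, the role of a fog node for a given user can be derived from a lookup of the user's fixed home location. The `USER_HOME` table and the `fog_role` function are hypothetical names used only for this example.

```python
# Hypothetical system user list: user ID -> location of the user's Home Fog.
USER_HOME = {"alice": "building_a", "bob": "building_b"}


def fog_role(fog_location, user_id, user_home=USER_HOME):
    """Return "HF" if the fog at `fog_location` is the user's Home Fog, else "FF"."""
    return "HF" if user_home[user_id] == fog_location else "FF"


role_for_alice = fog_role("building_a", "alice")
role_for_bob = fog_role("building_a", "bob")
```

The same fog node is therefore the Home Fog for one group and a Foreign Fog for users of every other group.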

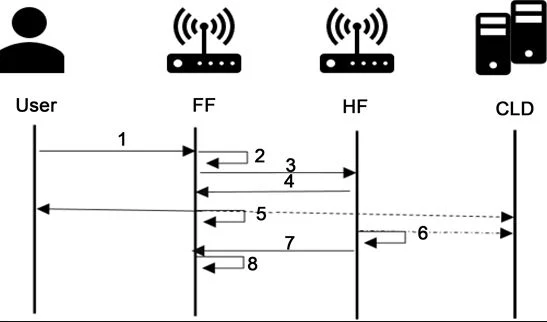

When the user requests a file from the fog node and the fog is the user’s HF, the fog node sends the file directly to the user. If the user is away from his/her HF, the request is processed as shown in Figure 4.

Figure 4. Data distribution protocol

1) Using authentication, a user logs in to the fog node closest to him. He requests to join it and specifies the joining period through the registration process.

2) The FF identifies the user’s home fog from the system user list. The cloud updates this list whenever a user is added or removed and broadcasts it to all fog nodes after each update. The list includes each user’s ID and HF. (Note: the HF of each user is fixed.)

3) The FF sends the joining message with the specified period to the user’s HF.

4) The HF sends an acceptance reply to acknowledge the joining.

5) The FF accepts the user as a visitor, updates its visitor list, and then synchronizes the list with the cloud.

6) The HF updates the location of its own users by changing the user’s location to the FF’s location and synchronizing it with the cloud. This table does not include visiting users; it covers only the HF’s own group members.

7) The HF sends the user’s secret data to the FF.

8) The FF stores the data in its data center.

If the period expires while the user is still at the FF, he must join the FF again. When the user returns to his HF, he sends a de-join request to the HF to inform it that he is back at his HF. The FF updates the current location table and synchronizes the table with the cloud.
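The joining steps above can be sketched as a small in-memory simulation. This is an illustration under stated assumptions, not the authors' implementation: the `Cloud` and `FogNode` classes, their field names, and the integer clock are all hypothetical, and authentication (step 1) and network transport are left out.

```python
from dataclasses import dataclass, field


@dataclass
class Cloud:
    user_home: dict                                      # system user list: user ID -> HF name
    visitor_lists: dict = field(default_factory=dict)    # FF name -> visitor list
    locations: dict = field(default_factory=dict)        # user ID -> current fog name


@dataclass
class FogNode:
    name: str
    cloud: Cloud
    data_center: dict = field(default_factory=dict)      # user ID -> secret data
    visitors: dict = field(default_factory=dict)         # user ID -> expiry time
    member_location: dict = field(default_factory=dict)  # own members only

    def accept_join(self, user_id, foreign_fog):
        """Home Fog side: acknowledge (step 4), relocate (6), send data (7)."""
        self.member_location[user_id] = foreign_fog.name
        self.cloud.locations[user_id] = foreign_fog.name  # synchronize with the cloud
        return self.data_center[user_id]

    def join(self, user_id, period, now, fogs):
        """Foreign Fog side: steps 2, 3, 5 and 8 for a visiting user."""
        home = fogs[self.cloud.user_home[user_id]]        # step 2: look up the HF
        if home is self:
            return self.data_center[user_id]              # user is at the HF already
        secret = home.accept_join(user_id, self)          # steps 3, 4, 6, 7
        self.visitors[user_id] = now + period             # step 5: visitor list
        self.cloud.visitor_lists[self.name] = dict(self.visitors)
        self.data_center[user_id] = secret                # step 8: store locally
        return secret

    def is_valid_visitor(self, user_id, now):
        """A visitor must join again once the agreed period has expired."""
        return now < self.visitors.get(user_id, 0)


cloud = Cloud(user_home={"alice": "fog_a"})
fogs = {"fog_a": FogNode("fog_a", cloud), "fog_b": FogNode("fog_b", cloud)}
fogs["fog_a"].data_center["alice"] = b"encrypted-file"
data = fogs["fog_b"].join("alice", period=60, now=0, fogs=fogs)
```

In this sketch the visiting user's data ends up in the data center of `fog_b`, and the visit is valid until the 60-unit period runs out.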

The proposed framework rests on a combination of previous approaches that provide secure data sharing in cloud computing, such as Attribute-Based Encryption (ABE)^ and Proxy Re-Encryption (PRE)^^ techniques. According to the researchers, unlike the previous system [9], the proposed revocation mechanism does not necessitate re-encrypting all system files and updating all secret keys. The proposed system provides real-time data sharing to group members.

"Our work will focus on providing an ideal environment for secure data sharing in a fog environment to overcome the disadvantages of a cloud-based data sharing system, which includes a high latency, requiring Internet connectivity with high bandwidth and lacking location awareness."

- Asma Alotaibi, Ahmed Barnawi, and Mohammed Buhari

Such an architecture is intended to outperform the cloud-based architecture and to further enhance system performance, especially from the perspective of security. The proposed framework provides high scalability and real-time data sharing with low latency.

The findings of their study and research can be accessed at the following link which includes simulation results as well as other experimental results.

__________________________________________________________________________

^ Key Policy Attribute-Based Encryption (KP-ABE) - In KP-ABE, data have a set of attributes linked to the data by encryption with the public key. Each user has an access structure, which is an access tree associated with data attributes. The user’s secret key is a reflection of the user’s access tree; therefore, the user can decrypt a ciphertext if the data attributes match his or her access tree.

^^ Proxy Re-Encryption (PRE) - PRE is a cryptographic primitive that allows a semi-trusted proxy to transform the ciphertext of data encrypted under the data owner’s public key into a different ciphertext under the group member’s public key. The semi-trusted proxy server needs a re-encryption key sent by the data owner for a successful conversion, and it is unable to discover the underlying plaintext of the encrypted data. Only an authorized user can decrypt the ciphertext.
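To make the matching rule concrete, the sketch below evaluates an access tree against a set of data attributes. It covers only the policy check, not the encryption itself; the tuple encoding with threshold gates and the attribute names are assumptions chosen for this example.

```python
def satisfies(tree, attributes):
    """Evaluate an access tree against a set of data attributes.

    A leaf is an attribute name (str). An inner node is a tuple
    (threshold, children) that is satisfied when at least `threshold`
    children are satisfied, so AND is (n, [...]) and OR is (1, [...]).
    """
    if isinstance(tree, str):
        return tree in attributes
    threshold, children = tree
    return sum(satisfies(child, attributes) for child in children) >= threshold


# Hypothetical user policy: location:building_a AND (role:doctor OR role:nurse)
user_tree = (2, ["location:building_a", (1, ["role:doctor", "role:nurse"])])

can_read = satisfies(user_tree, {"location:building_a", "role:nurse"})
cannot_read = satisfies(user_tree, {"location:building_b", "role:doctor"})
```

A file tagged with `location:building_a` and `role:nurse` matches this tree, while a file tagged for another building does not.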
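The transformation can be illustrated with a toy ElGamal-style construction in the spirit of the classic Blaze-Bleumer-Strauss scheme. This is an assumption made for illustration: the paper's own PRE construction may differ, the parameters are far too small to be secure, and `rekey` takes both secret exponents for simplicity. The modular inverse via `pow(x, -1, m)` requires Python 3.8 or later.

```python
P = 2039          # toy safe prime, P = 2*Q + 1 (far too small for real use)
Q = 1019          # prime order of the subgroup generated by G
G = 4             # generator of the order-Q subgroup of Z_P*


def keygen(secret):
    """Return (secret key, public key) for a secret exponent in 1..Q-1."""
    return secret, pow(G, secret, P)


def encrypt(public_key, message, k):
    """Encrypt `message` (1..P-1) under `public_key` with ephemeral exponent k."""
    return (message * pow(G, k, P)) % P, pow(public_key, k, P)


def rekey(owner_secret, member_secret):
    """Re-encryption key mapping owner ciphertexts to member ciphertexts."""
    return (member_secret * pow(owner_secret, -1, Q)) % Q


def reencrypt(rk, ciphertext):
    """Proxy step: re-target the ciphertext using only ciphertext components."""
    c1, c2 = ciphertext
    return c1, pow(c2, rk, P)


def decrypt(secret, ciphertext):
    """Recover the message with the secret key that matches the ciphertext."""
    c1, c2 = ciphertext
    mask = pow(c2, pow(secret, -1, Q), P)   # equals G**k mod P
    return (c1 * pow(mask, -1, P)) % P


owner_sk, owner_pk = keygen(123)
member_sk, member_pk = keygen(456)
ct = encrypt(owner_pk, 42, k=77)
ct_member = reencrypt(rekey(owner_sk, member_sk), ct)
recovered = decrypt(member_sk, ct_member)
```

The proxy only raises one ciphertext component to the re-encryption key, after which the member's secret key, rather than the owner's, recovers the message.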

__________________________________________________________________________

Details about the Proposed Framework:

This article is an excerpt taken from Journal of Information Security, Vol. 08 No. 03 (2017), Article ID: 77650, 20 pages, DOI: 10.4236/jis.2017.83014, "Attribute-Based Secure Data Sharing with Efficient Revocation in Fog Computing" by Asma Alotaibi, Ahmed Barnawi, Mohammed Buhari, Department of Information Technology, King Abdulaziz University, Jeddah, Saudi Arabia.

References:

[1]. Firdhous, M., Ghazali, O. and Hassan, S. (2014) Fog Computing: Will It Be the Future of Cloud Computing. Proceedings of the 3rd International Conference on Informatics & Applications, Kuala Terengganu, Malaysia, 8-15.

[2]. Bonomi, F., Milito, R., Zhu, J. and Addepalli, S. (2012) Fog Computing and Its Role in the Internet of Things. Proceedings of the First Edition of the MCC Workshop on Mobile Cloud Computing, Helsinki, Finland, 17 August 2012, 13-16. https://doi.org/10.1145/2342509.2342513

[3]. Stojmenovic, J. (2014) Fog Computing: A Cloud to the Ground Support for Smart Things and Machine-to-Machine Networks. Australasian Telecommunication Networks and Applications Conference (ATNAC), Southbank, VIC, 26-28 November 2014, 117-122. https://doi.org/10.1109/atnac.2014.7020884

[4]. Luan, T., Gao, L., Li, Z., Xiang, Y., We, G. and Sun, L. (2016) A View of Fog Computing from Networking Perspective. ArXivPrepr. ArXiv160201509.

[5]. Dastjerdi, A., Gupta, H., Calheiros, R., Ghosh, S. and Buyya, R. (2016) Fog Computing: Principals, Architectures, and Applications. ArXivPrepr. ArXiv160102752.

[6]. Yi, S., Hao, Z., Qin, Z. and Li, Q. (2015) Fog Computing: Platform and Applications. 2015 3rd IEEE Workshop on Hot Topics in Web Systems and Technologies (HotWeb), Washington DC, 12-13 November 2015, 73-78. https://doi.org/10.1109/hotweb.2015.22

[7]. Scale, M. (2009) Cloud Computing and Collaboration. Library Hi Tech News, 26, 10-13. https://doi.org/10.1108/07419050911010741

[8]. Thilakanathan, D., Chen, S., Nepal, S. and Calvo, R. (2014) Secure Data Sharing in the Cloud. In: Nepal, S. and Pathan, M., Eds., Security, Privacy and Trust in Cloud Systems, Springer, Berlin, Heidelberg, 45-72. https://doi.org/10.1007/978-3-642-38586-5_2

[9]. Yu, S., Wang, C., Ren, K. and Lou, W. (2010) Achieving Secure, Scalable, and Fine-Grained Data Access Control in Cloud Computing. 2010 Proceedings IEEE INFOCOM, San Diego, CA, 14-19 March 2010, 1-9. https://doi.org/10.1109/infcom.2010.5462174